REU Site: Collaborative Human-Robot Interaction

Abstract: Human-Robot Interaction (HRI) has the potential to affect many real-world domains, such as hospitals, homes, schools, offices, and infrastructure sites, where robots are uniquely able to assist a human team member in achieving a common goal. However, many challenges to effective human-robot collaboration stand in the way of this promise, such as communicating with human partners using natural language, providing information to an operator through a visual interface, or quickly providing information so that a person is able to offer assistance. We propose an HRI research direction that provides several closely related projects for undergraduate research experiences. The project team will mentor 10 undergraduates each summer in research directly impacting assistive robotics, in the following technical areas: development of autonomous robot capabilities targeted for care environments, such as networked robotic devices cooperating with care professionals to perform complex tasks in support of aging in place; human command-and-control infrastructure for operations that use robots for technology support; and connectivity and security for a network of robots, computers, and embedded devices collectively used to achieve mission-critical goals while utilizing unused parts of the wireless spectrum. The goal of this Research Experiences for Undergraduates (REU) site is to develop and evaluate robotic systems that help bridge the human-robot collaboration gap and achieve the above objectives efficiently and effectively. The summer activities will give undergraduates hands-on science and engineering experience related to current research projects, along with professional development, including training sessions on writing graduate school applications and applying for fellowships to support graduate education.
The site will develop new autonomous robot capabilities and supporting network and data science technology to address the real-world challenges of operating autonomous systems in hospital, clinic, home, and infrastructure environments. Specifically, the site will develop solutions for semi-autonomous robot behavior with humans in the loop, wireless network connections in rapidly changing frequency domains, and processing high volumes of real-world data. The proposed site presents five projects related to these computing domains: Empirical Study of Socially-Appropriate Interaction; Language-Based Human-Robot Collaboration; Autonomous Robotic Exploration of Underground Mine Environments; Multi-robot Collaboration and Human in-the-loop for Safe, Accurate and Reliable Inspection; and Network Management of Heterogeneous Robotics Devices in a Wireless Environment. This REU site links these projects through the common objective of developing assistive technology. Students will develop domain knowledge, mathematical skills, and interdisciplinary competency. The project's possible intellectual contributions include studies of long-term human-robot interaction, wireless networking for challenging signal environments, autonomous robot capabilities for human-robot teaming, and data science tools for processing high volumes of data from real-world systems.

Details

- Organization: National Science Foundation

- Award #: IIS-1757929

- Amount: $360,000

- Date: Feb. 1, 2018 - Jan. 31, 2022

- PI: Dr. David Feil-Seifer

- Co-PI: Dr. Shamik Sengupta

Supported Publications

- Valentine, W., Webb, M., Collum, C., Feil-Seifer, D., & Hand, E. HCC: An explainable framework for classifying discomfort from video. To appear in Proceedings of the International Symposium on Visual Computing (ISVC), Lake Tahoe, NV, USA, Oct 2024.

- Arora, P., Alcolea Vila, P., Borghese, A., Carlson, S., Feil-Seifer, D., & Papachristos, C. UAV-based Foliage Plant Species Classification for Semantic Characterization of Pre-Fire Landscapes. In Proceedings of the International Conference on Unmanned Aircraft Systems (ICUAS), Chania, Crete, Greece, IEEE, Jun 2024.

- Becker, T., Jiang, S., Feil-Seifer, D., & Nicolescu, M. Single Robot Multitasking through Dynamic Resource Allocation. In 2023 IEEE-RAS 22nd International Conference on Humanoid Robots (Humanoids), pages 1-8, IEEE, Jan 2024.

- Salek Shahrezaie, R., Manalo, B. L., Brantley, A., Lynch, C., & Feil-Seifer, D. Advancing Socially-Aware Navigation for Public Spaces. In International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, IEEE, Aug 2022.

- Lupton, B., Zappe, M., Thom, J., Sengupta, S., & Feil-Seifer, D. Analysis and Prevention of Security Vulnerabilities in a Smart City. In Computing and Communication Workshop and Conference, Las Vegas, NV, USA, IEEE, Jan 2022. Best paper winner.

- Banisetty, S., Rajamohan, V., Vega, F., & Feil-Seifer, D. A Deep Learning Approach To Multi-Context Socially-Aware Navigation. In International Conference on Robot and Human Interactive Communication (RO-MAN), pages 23-30, Vancouver, BC, Canada, Aug 2021.

- Farlessyost, W., Grant, K., Davis, S., Hand, E., & Feil-Seifer, D. The Effectiveness of Multi-Label Classification and Multi-Output Regression in Social Trait Recognition. Sensors, 21(12), Jun 2021.

- Honour, A., Banisetty, S., & Feil-Seifer, D. Perceived Social Intelligence as Evaluation of Socially Navigation. In ACM Human-Robot Interaction Companion, Boulder, CO, USA, Mar 2021.

- Blankenburg, J., Zagainova, M., Simmons, M. S., Talavera, G., Nicolescu, M., & Feil-Seifer, D. Human-Robot Collaboration and Dialogue for Fault Recovery on Hierarchical Tasks. In International Conference on Social Robotics (ICSR), CO, Oct 2020.

- Palmer, A., Peterson, C., Blankenburg, J., Nicolescu, M., & Feil-Seifer, D. Simple Camera-to-2D-LiDAR Calibration Method for General Use. In International Symposium on Visual Computing (ISVC), Virtual, Oct 2020.

- Banisetty, S. Multi-Context Socially-Aware Navigation Using Non-Linear Optimization. University of Nevada, Reno, May 2020.

- Ceccarelli, N., Regis, P., Sengupta, S., & Feil-Seifer, D. Optimal UAV Positioning for a Temporary Network Using an Iterative Genetic Algorithm. In Wireless and Optical Communications Conference, Newark, NJ, USA, May 2020.

- Helm, M., Santos, C. J., Denecker, L., Huang, Y., Barchard, K., Lapping-Carr, L., Westfall, S., & Feil-Seifer, D. What the factors(s)!?: Perceptions of social intelligence in robots. Poster Paper in Western Psychological Association Convention, San Francisco, CA, Apr 2020.

- Le, C., Pham, A., La, H., & Feil-Seifer, D. A Multi-Robotic System for Environmental Dirt Cleaning. In Proceedings of the IEEE/SICE International Symposium on System Integration (SII), Honolulu, Hawaii, Jan 2020.

- Ruetten, L., Regis, P., Sengupta, S., & Feil-Seifer, D. Area-Optimized UAV Swarm Network for Search and Rescue Operations. In Proceedings of the Computing and Communication Workshop and Conference (CCWC), Las Vegas, NV, IEEE, Jan 2020. Best paper winner.

- Nicolescu, M., Arnold, N., Blankenburg, J., Feil-Seifer, D., Banisetty, S., Nicolescu, M., Palmer, A., & Monteverde, T. Learning of Complex-Structured Tasks from Verbal Instruction. In International Conference on Humanoid Robots, Toronto, Canada, Oct 2019.

- Sturgeon, S., Palmer, A., Blankenburg, J., & Feil-Seifer, D. Perception of Social Intelligence in Robots Performing False-Belief Tasks. In International Symposium on Robot and Human Interactive Communication (RO-MAN), New Delhi, India, IEEE, Oct 2019.

- Fite, R., Khattak, S., Feil-Seifer, D., & Alexis, K. History-Aware Free Space Detection for Efficient Autonomous Exploration using Aerial Robots. In IEEE Aerospace Conference, Big Sky, Montana, IEEE, Mar 2019.

- Brodeur, T., Regis, P., Feil-Seifer, D., & Sengupta, S. Search and Rescue Operations with Mesh Networked Robots. In IEEE Annual Ubiquitous Computing, Electronics & Mobile Communication Conference (IEEE UEMCON), New York City, NY, IEEE, Nov 2018.

Supported Projects

Improving People Detecting Infrastructures for the Purpose of Modeling Human Behavior June 3, 2019 - Present

We need to model human behavior to better understand how robots should behave in crowds. To do that, we can use lasers to track people's movements during different social interactions. We have developed a system that enables multiple stationary 2D lasers to capture the movements of people within a large room during social events. With calibrated lasers, we tracked a moving person's ankles. We overlapped all laser fields and converted them into a single point cloud. By comparing the point cloud over five time frames, we correctly detected movement and located ankles in the point cloud with our circle detection algorithm. Using the locations of the tracked ankles, we then used linear regression to predict the person's path. Although the predicted positions are not exact, the overall shape of the predicted path resembles the actual path taken by the person. We hope that others will use this system to better understand and model human behavior.
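The pipeline described above (merge calibrated scans, find ankles, regress the path) can be sketched in a few lines of Python. This is a minimal, hypothetical sketch rather than the project's code: it assumes each laser's calibration is a rigid 2D pose `(tx, ty, theta)` in a shared room frame, substitutes a standard algebraic (Kasa) least-squares circle fit for the unspecified circle detection algorithm, and models the path as a straight line fitted independently to x(t) and y(t). The names `to_common_frame`, `merge_scans`, `fit_circle`, and `predict_position` are illustrative.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def to_common_frame(scan: Sequence[Point], pose: Tuple[float, float, float]) -> List[Point]:
    """Rotate and translate one laser's (x, y) points into the shared room frame.

    `pose` = (tx, ty, theta) is a hypothetical calibration result: the laser's
    position (metres) and heading (radians) in the room frame.
    """
    tx, ty, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in scan]

def merge_scans(scans: Sequence[Sequence[Point]],
                poses: Sequence[Tuple[float, float, float]]) -> List[Point]:
    """Overlap all laser fields into a single 2D point cloud."""
    cloud: List[Point] = []
    for scan, pose in zip(scans, poses):
        cloud.extend(to_common_frame(scan, pose))
    return cloud

def _det3(m: List[List[float]]) -> float:
    """Determinant of a 3x3 matrix given as nested lists."""
    return (m[0][0] * (m[1][1] * m[2][2] - m[1][2] * m[2][1])
            - m[0][1] * (m[1][0] * m[2][2] - m[1][2] * m[2][0])
            + m[0][2] * (m[1][0] * m[2][1] - m[1][1] * m[2][0]))

def fit_circle(points: Sequence[Point]) -> Tuple[float, float, float]:
    """Kasa least-squares circle fit to a point cluster; returns (cx, cy, r).

    Fits x^2 + y^2 + D*x + E*y + F = 0 by solving the 3x3 normal equations
    with Cramer's rule; needs at least 3 non-collinear points.
    """
    rows = [(x, y, 1.0) for x, y in points]
    rhs = [-(x * x + y * y) for x, y in points]
    ata = [[sum(r[i] * r[j] for r in rows) for j in range(3)] for i in range(3)]
    atb = [sum(r[i] * b for r, b in zip(rows, rhs)) for i in range(3)]
    det = _det3(ata)
    coeffs = []
    for k in range(3):
        m = [row[:] for row in ata]
        for i in range(3):
            m[i][k] = atb[i]  # replace column k with the right-hand side
        coeffs.append(_det3(m) / det)
    d, e, f = coeffs
    cx, cy = -d / 2.0, -e / 2.0
    return cx, cy, math.sqrt(cx * cx + cy * cy - f)

def _fit_line(ts: Sequence[float], vs: Sequence[float]) -> Tuple[float, float]:
    """Ordinary least squares v = a + b*t; returns (a, b)."""
    n = len(ts)
    mt, mv = sum(ts) / n, sum(vs) / n
    b = (sum((t - mt) * (v - mv) for t, v in zip(ts, vs))
         / sum((t - mt) ** 2 for t in ts))
    return mv - b * mt, b

def predict_position(track: Sequence[Tuple[float, float, float]], t_future: float) -> Point:
    """Extrapolate a track of (t, x, y) ankle positions to time t_future."""
    ts = [p[0] for p in track]
    ax, bx = _fit_line(ts, [p[1] for p in track])
    ay, by = _fit_line(ts, [p[2] for p in track])
    return ax + bx * t_future, ay + by * t_future
```

For example, a track sampled over five frames as `[(0, 0.0, 0.0), (1, 0.5, 0.1), (2, 1.0, 0.2), (3, 1.5, 0.3), (4, 2.0, 0.4)]` extrapolates to approximately `(3.0, 0.6)` at `t = 6`. In practice one would also reject fitted circles whose radius is implausible for an ankle; that threshold is not given in the text.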

Understanding First Impressions from Face Images June 3, 2019 - March 31, 2020

This project investigated how first impressions are expressed in faces through the automated recognition of social traits from face images. First impressions are widely understood to have three dimensions: trustworthiness, attractiveness, and competence. All three of these social traits have strong evolutionary implications. We form first impressions of someone within 100 ms of meeting them, which is less time than it takes to blink, and these impressions are composed of judgments of the three social traits. This project aimed to answer the question: "Can automated methods accurately recognize social traits from images of faces?" Two main challenges are associated with this research question, and both relate to the data available for the problem. First, there is currently no objective measure of first impressions, so labels for first impressions come from subjective human ratings of faces. Second, only one dataset is available for this problem, and it has roughly 1,100 images. Training a state-of-the-art machine learning algorithm to accurately and reliably recognize social traits from face images using only 1,100 images, each labeled with subjective human ratings, is nearly impossible. Student involvement: Two REU participants investigated a method for combining multiple datasets from different domains with partial labels for the problem of social trait recognition. Using this combined dataset, the students were able to train a model that recognized social traits with improved accuracy over the baseline approach.
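One common way to train on a combined dataset in which each source labels only some traits is to mask the loss, so that a sample contributes error only for the traits it was actually rated on. The sketch below illustrates that idea in plain Python; it is not necessarily the method the REU students used, and the trait order in `TRAITS`, the rating scale, and the helper names `to_unified` and `masked_mse` are assumptions for illustration.

```python
from typing import Dict, List, Optional, Sequence

# Hypothetical shared label schema for the combined dataset.
TRAITS = ("trustworthiness", "attractiveness", "competence")

def to_unified(ratings: Dict[str, float]) -> List[Optional[float]]:
    """Map one source dataset's ratings onto the shared schema; None = unlabeled."""
    return [ratings.get(trait) for trait in TRAITS]

def masked_mse(predictions: Sequence[Sequence[float]],
               labels: Sequence[Sequence[Optional[float]]]) -> float:
    """Mean squared error computed only over the labels that are present."""
    total, count = 0.0, 0
    for pred_row, label_row in zip(predictions, labels):
        for pred, label in zip(pred_row, label_row):
            if label is None:
                continue  # trait not rated in this sample's source dataset
            total += (pred - label) ** 2
            count += 1
    return total / count if count else 0.0
```

For example, a face from a dataset that rated only trustworthiness becomes `to_unified({"trustworthiness": 0.7})`, i.e. `[0.7, None, None]`, and `masked_mse` ignores the model's attractiveness and competence outputs for that face. In a deep-learning framework the same effect is usually obtained by multiplying per-element losses by a 0/1 mask.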

Socially-Aware Navigation Sept. 1, 2017 - Present

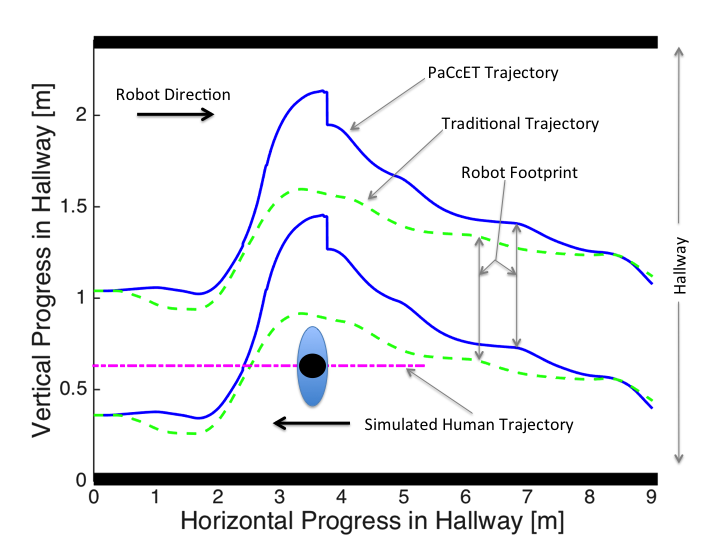

As robots become more integrated into people's daily lives, interpersonal navigation becomes a larger concern. In the near future, Socially Assistive Robots (SAR) will be working closely with people in public environments. To support this, safe robot navigation in real-world environments has become an active area of study. However, for robots to interact effectively with people, they will also need to exhibit socially appropriate behavior. Because real-world environments are full of unpredictable events, the potential social cost of a robot not following social norms is high. Robots that violate these norms risk being isolated and falling out of use, or even being mistreated by their human interaction partners.

The focus of this work is to develop a people-aware navigation planner that handles a larger range of person-oriented navigation behaviors by utilizing a multi-modal distribution model of human-human interaction. Interpersonal distance models, based on features that can be detected in real time using on-board robot sensors, can discriminate between a set of human actions and provide a social appropriateness score for a potential navigation trajectory. These models are used to weight candidate trajectories and select the most appropriate action for the given situation. The resulting navigation adheres to social norms while still pursuing a stated navigation goal. The goal of this system is to sense interpersonal distance and choose a trajectory that jointly optimizes for both social appropriateness and goal-orientedness.
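The joint optimization of social appropriateness and goal-orientedness can be illustrated with a small trajectory-scoring sketch. It is a hypothetical simplification, not the planner itself: a single isotropic Gaussian around each detected person stands in for the multi-modal interaction model, the goal term is just the distance from a trajectory's endpoint to the goal, and `sigma`, `w_social`, and `w_goal` are illustrative parameters.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def social_cost(traj: Sequence[Point], people: Sequence[Point], sigma: float = 0.8) -> float:
    """Average Gaussian proximity penalty along a trajectory.

    Each waypoint is penalized by exp(-d^2 / (2 sigma^2)) for every person,
    so passing close to someone costs more. `sigma` (metres) is hypothetical.
    """
    cost = 0.0
    for x, y in traj:
        for px, py in people:
            d2 = (x - px) ** 2 + (y - py) ** 2
            cost += math.exp(-d2 / (2 * sigma ** 2))
    return cost / len(traj)

def goal_cost(traj: Sequence[Point], goal: Point) -> float:
    """Distance from the trajectory's final waypoint to the navigation goal."""
    x, y = traj[-1]
    return math.hypot(x - goal[0], y - goal[1])

def select_trajectory(candidates: List[Sequence[Point]], people: Sequence[Point], goal: Point,
                      w_social: float = 1.0, w_goal: float = 1.0) -> Sequence[Point]:
    """Pick the candidate minimizing the weighted sum of social and goal costs."""
    def total(traj: Sequence[Point]) -> float:
        return w_social * social_cost(traj, people) + w_goal * goal_cost(traj, goal)
    return min(candidates, key=total)
```

With one person standing at (2, 0) and a goal at (4, 0), a straight path through the person and a curved detour through (2, 1.5) both reach the goal, so the lower social cost decides and the detour is selected. Raising `w_goal` relative to `w_social` shifts the trade-off toward trajectories that end closer to the goal.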

Distributed Control Architecture for Collaborative Multi-Robot Task Allocation March 1, 2016 - Present

Real-world tasks are not just a series of sequential steps; they typically combine multiple types of constraints. These tasks pose significant challenges: enumerating all the possible ways in which a task can be performed can lead to large representations, and it is difficult to keep track of the task constraints during execution. Previously, we developed an architecture that provides a compact encoding of such tasks and validated it in a single-robot domain. We recently extended this architecture to address the problem of representing and executing tasks in a collaborative multi-robot setting. First, the architecture allows for online, dynamic allocation of robots to various steps of the task. Second, it ensures that the collaborative robot system obeys all of the task constraints. Third, it allows for opportunistic and flexible task execution under different environmental conditions. We demonstrated the performance of our architecture on a team of two humanoid robots (a Baxter robot and a PR2 robot) performing hierarchical tasks. Further extensions of this architecture are currently being explored.
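To make the idea of a compact, constraint-aware task encoding concrete, here is a minimal sketch of a hierarchical task tree and a function that lists which steps may start right now. The node types are an assumption for illustration (THEN for ordered children, AND for children in any order, OR for alternative ways to do a step), as are the names `Node`, `eligible`, and `allocate`; the allocation shown simply hands open steps to idle robots and is far simpler than the architecture's own online allocation.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set

@dataclass
class Node:
    kind: str  # "LEAF", "THEN" (in order), "AND" (any order), "OR" (any one)
    name: str = ""
    children: List["Node"] = field(default_factory=list)

def leaves(node: Node) -> Set[str]:
    """All primitive steps below a node."""
    if node.kind == "LEAF":
        return {node.name}
    return set().union(*(leaves(c) for c in node.children))

def complete(node: Node, done: Set[str]) -> bool:
    """True once the node's constraint is satisfied by the finished steps."""
    if node.kind == "LEAF":
        return node.name in done
    if node.kind == "OR":
        return any(complete(c, done) for c in node.children)
    return all(complete(c, done) for c in node.children)  # THEN and AND

def eligible(node: Node, done: Set[str]) -> Set[str]:
    """Steps that can start now without violating any constraint."""
    if complete(node, done):
        return set()
    if node.kind == "LEAF":
        return {node.name}
    if node.kind == "THEN":
        # Only the first unfinished child of an ordered node is open.
        first_open = next(c for c in node.children if not complete(c, done))
        return eligible(first_open, done)
    if node.kind == "OR":
        started = [c for c in node.children if leaves(c) & done]
        if started:  # commit to the alternative already under way
            return eligible(started[0], done)
    result: Set[str] = set()  # AND, or OR with no alternative started yet
    for c in node.children:
        result |= eligible(c, done)
    return result

def allocate(task: Node, done: Set[str], busy: Set[str], idle_robots: List[str]) -> Dict[str, str]:
    """Give each idle robot one open step that no other robot is working on."""
    open_steps = sorted(eligible(task, done) - busy)
    return dict(zip(idle_robots, open_steps))
```

For instance, with a task `THEN(AND(pick_cup, pick_plate), OR(serve_tray, serve_hand))` built from `Node` objects, `allocate(task, set(), set(), ["baxter", "pr2"])` assigns `pick_cup` to `baxter` and `pick_plate` to `pr2`, and neither serving step becomes eligible until both picks are done.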

Due to the adaptability of our multi-robot control architecture, this work has become the foundation for several ongoing extensions. The first extension modifies the architecture to allow a human to collaborate with the multi-robot team to complete the joint task. To allow the human and robots to communicate, this work augments the control architecture with a third robot “brain” that uses intent recognition to determine which objects the human is interacting with and updates the state of the task held by the other robots accordingly. Second, the architecture is being adapted to allow a human to train a robot to complete a task through natural speech commands; the multi-robot team is then able to complete the newly trained task. The third extension focuses on learning generalized task sequences using the types of hierarchical constraints defined by this control architecture. The last extension modifies the architecture to allow heterogeneous grasp affordances for each item in the task. These affordances let the robots incorporate how well they can grasp a specific object, based on their gripper and arm mechanics, into the task allocation, so that robots are assigned the tasks for which they are best suited. This work is currently being completed, and the aim is to submit it to DARS 2018. Each of these extensions emphasizes the generalizability of this control architecture, and together they illustrate the wide range of applications to which the architecture can be adapted.
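The grasp-affordance extension can be pictured as scoring each robot-step pair and preferring the best-suited robot. The sketch below is hypothetical: it assumes each robot reports an affordance score in [0, 1] per step, and it uses a simple greedy matching (take the highest-scoring free pair first) rather than an optimal assignment method such as the Hungarian algorithm. The function name and scores are illustrative.

```python
from typing import Dict, List, Set

def allocate_by_affordance(steps: List[str], robots: List[str],
                           affordance: Dict[str, Dict[str, float]],
                           min_score: float = 0.0) -> Dict[str, str]:
    """Greedily match robots to steps by grasp affordance score.

    `affordance[robot][step]` is a hypothetical score in [0, 1] describing how
    well that robot's gripper and arm can grasp the step's object. Missing
    entries count as 0.0, and pairs scoring at or below `min_score` are never
    assigned.
    """
    pairs = sorted(
        ((affordance.get(r, {}).get(s, 0.0), r, s) for r in robots for s in steps),
        key=lambda p: p[0],
        reverse=True,  # best-suited pairs first; ties keep input order
    )
    assignment: Dict[str, str] = {}
    taken: Set[str] = set()
    for score, robot, step in pairs:
        if score <= min_score or robot in assignment or step in taken:
            continue  # too poor a fit, robot already busy, or step already covered
        assignment[robot] = step
        taken.add(step)
    return assignment
```

With `affordance = {"baxter": {"grasp_cup": 0.9, "grasp_tray": 0.4}, "pr2": {"grasp_cup": 0.7, "grasp_tray": 0.8}}`, Baxter is assigned the cup and the PR2 the tray, even though the PR2 could also grasp the cup reasonably well. Greedy matching is easy to recompute online as steps open up, at the cost of occasionally missing the globally best assignment.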

Methods For Facilitating Long-Term Acceptance of a Robot July 1, 2013 - Present

Scenarios that call for long-term HRI (lt-HRI), such as home health care and cooperative work environments, have interesting social dynamics even before robots are introduced. Because the success of human-only teams can be limited by trust and willingness to collaborate within the team, factors that may affect the adoption of robots in long-term settings are worth considering. A coworker who is unwilling to collaborate with, or trust the abilities of, a teammate may refuse to include that teammate in many job-related tasks due to territorial behaviors. Exclusion of a robotic team member might eventually lead to a lack of productivity in lt-HRI. Behavior toward a robot companion may be governed, in part, by preconceived notions about robots. However, prior work has shown that simple team-building exercises can positively affect attitudes toward a robot, even if the robot underperforms at a task. This objective will address these issues by adapting and evaluating facilitation strategies that have been successful in encouraging the adoption of new technology or improving team cohesion. We will study how these facilitation techniques can bolster human-robot cooperation.