Fast Detection of Partially Occluded Humans from Mobile Platforms

Abstract: In this proposal, we outline a methodology capable of detecting people poses given far from ideal circumstances for accurate pose detection. In addition, we aim for a resulting algorithm requiring little-enough CPU power to operate on a low-power device such as a cell-phone. Recent years have seen tremendous advances in camera systems that capture both color and depth information from their environments. However, despite some initial promise in the development of algorithms built on these new cameras, there has been little significant progress in the development of person detection and tracking using color and depth over the same time period. In fact, any successful methods of person detection rely on assumptions, such as a body orientation upright to the camera, and a view of the person free of occlusion. Systems built on the state of the art routinely encounter difficulties tracking people in cluttered scenes where occlusion is unavoidable. There is still significant room to improve the accuracy, speed, and robustness of person detection using convolutional neural networks and deep learning. This directly applies to NASA goals of human-robot cooperation in planetary exploration settings by removing assumptions that are simply not true in zero-G or even cluttered terrestrial settings. We propose a project of stated interest for collaboration with the Intelligent Robotics Group (IRG) at NASA Ames. Our project will support and complement on-going efforts of the IRG to develop effective human-robot communication in the full range of planetary exploration environments.

Details

- Organization: NASA Space Grant Consortium

- Award #: NSHE-15-40

- Amount: $30,000

- Date: July 1, 2014 - Feb. 29, 2016

- PI: Dr. David Feil-Seifer

- Co-PI:

Dr. Richard Kelley

Supported Publications

- Frank, D. & Feil-Seifer, D. Fast Detection of Partially Occluded Humans from Mobile Platforms: Preliminary Work. Poster Paper in Nevada NASA EPSCOR and Space Grant Consortium Annual Meeting, Apr 2014. ( details ) ( .pdf )

Supported Projects

Partially Occluded Person Pose Detection July 1, 2014 - Dec. 31, 2016

Detecting humans is a common need in human-robot interaction. A robot working in proximity to people often needs to know where people are around it and may also need to know what the pose, i.e. location of arms, legs, of the person. Attempting to solve this problem with a monocular camera is difficult due to the high variability in the data; for example, differences in clothes and hair can easily confuse the system and so can differences in scene lighting. A common example of a pose detection system is the Microsoft Kinect. The Kinect uses depth images as input for the pose classifier. In a depth image, each pixel has the distance to the nearest obstacle. With the Kinect, users can play games just by moving, since the Kinect can detect their pose; however, the Kinect pose detector is optimized to be used for entertainment. It assumes that the view of the person is completely unobstructed and that the person is standing upright in the frame.

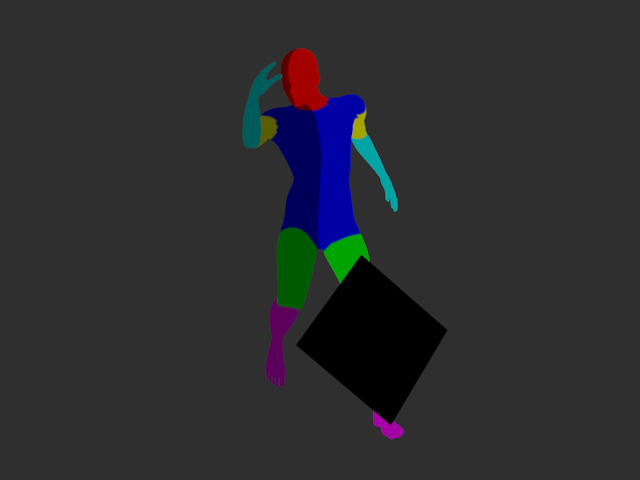

The goal of this project is to create a robust system for person detection. This system will be able to handle detection even when a person is partially occluded by obstacles. It will also make no assumptions about the position of the camera relative to the person. By removing the position assumption, the system will function even when running on a mobile platform, such as a UAV, that may be above or behind the person. The system functions in two stages. In the first stage, the system takes depth images and uses this information to classify every pixel in the image as a certain body part, or non-person, to produce a mask image. This output gives a 2-D view of the scene that shows where each body part is in the image. There are many different techniques for producing the mask image, evaluating the effectiveness of each is one step of the project. In the second stage, the depth data will be combined with the mask image to produce a 3-D representation of the person. That representation will be used to find the position of each body part of interest in 3-D space.

Detecting humans is a common need in human-robot interaction. A robot working in proximity to people often needs to know where people are around it and may also need to know what the pose, i.e. location of arms, legs, of the person. Attempting to solve this problem with a monocular camera is difficult due to the high variability in the data; for example, differences in clothes and hair can easily confuse the system and so can differences in scene lighting. A common example of a pose detection system is the Microsoft Kinect. The Kinect uses depth images as input for the pose classifier. In a depth image, each pixel has the distance to the nearest obstacle. With the Kinect, users can play games just by moving, since the Kinect can detect their pose; however, the Kinect pose detector is optimized to be used for entertainment. It assumes that the view of the person is completely unobstructed and that the person is standing upright in the frame.

The goal of this project is to create a robust system for person detection. This system will be able to handle detection even when a person is partially occluded by obstacles. It will also make no assumptions about the position of the camera relative to the person. By removing the position assumption, the system will function even when running on a mobile platform, such as a UAV, that may be above or behind the person. The system functions in two stages. In the first stage, the system takes depth images and uses this information to classify every pixel in the image as a certain body part, or non-person, to produce a mask image. This output gives a 2-D view of the scene that shows where each body part is in the image. There are many different techniques for producing the mask image, evaluating the effectiveness of each is one step of the project. In the second stage, the depth data will be combined with the mask image to produce a 3-D representation of the person. That representation will be used to find the position of each body part of interest in 3-D space.